Make your AI better at data work with dbt's agent skills

Community-driven creation and curation of best practices is perhaps the driving factor behind the rise of dbt and analytics engineering: transferable workflows and processes let everyone create and share organizational knowledge. In the early days, the dbt_style_guide.md from Fishtown Analytics (now dbt Labs) contained foundational guidelines for anyone adopting the dbt viewpoint for the first time.

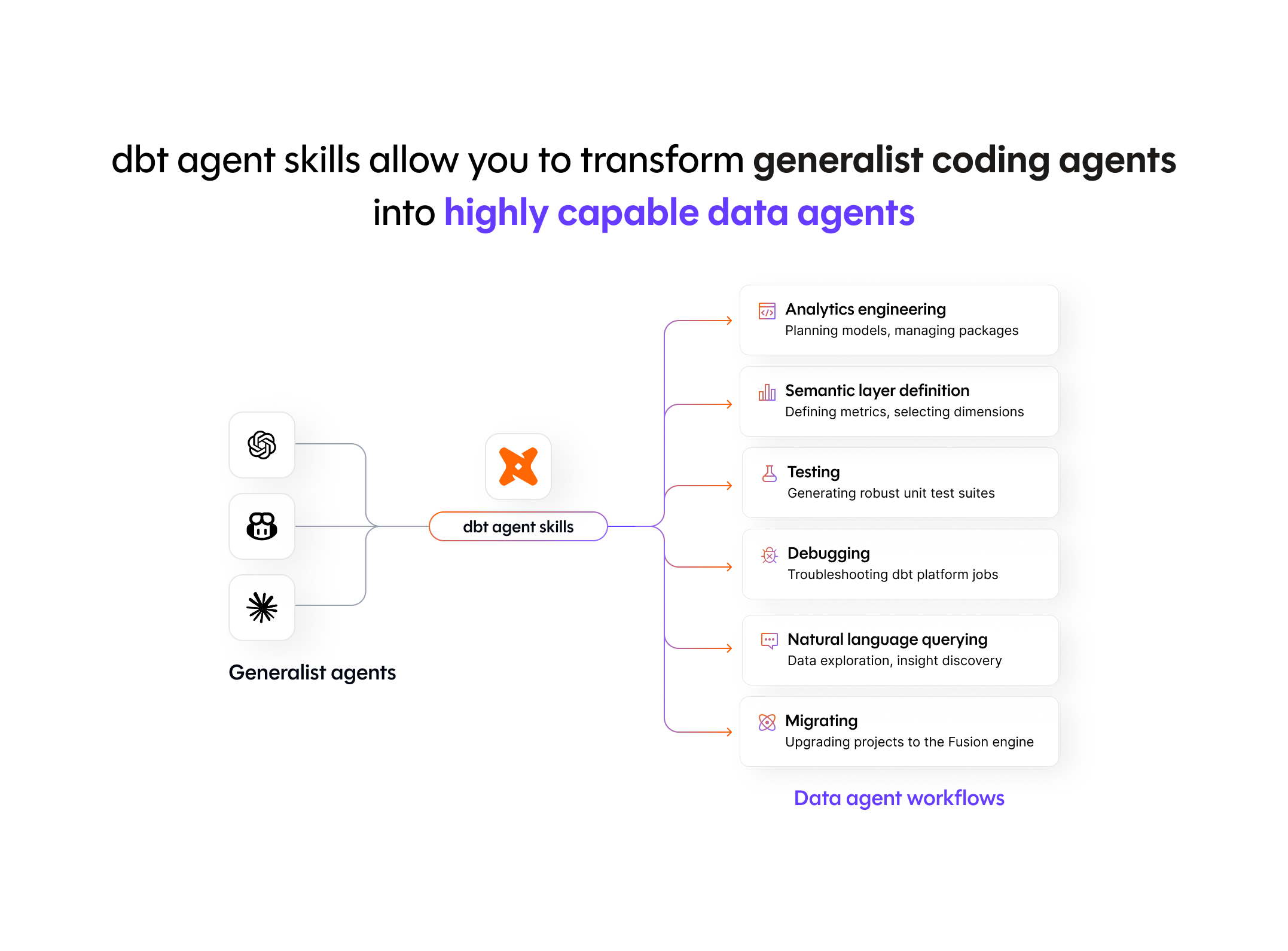

Today we released a collection of dbt agent skills so that AI agents (like Claude Code, OpenAI's Codex, Cursor, Factory, or Kilo Code) can follow the same dbt best practices you would expect of any collaborator in your codebase. This matters because skills extend an agent's baseline capabilities, which can transform a generalist coding agent into a highly capable data agent.

These skills encapsulate a broad swath of hard-won knowledge from the dbt Community and the dbt Labs Developer Experience team. Collectively, they represent dozens of hours of focused work by dbt experts, backed by years of hands-on experience with dbt.